Earlier this week, an artificial intelligence detector notified Baltimore police of a teenager wielding a gun on a high school campus. However, after police apprehended the suspect and secured the scene, they found that the teenager was carrying only a bag of chips.

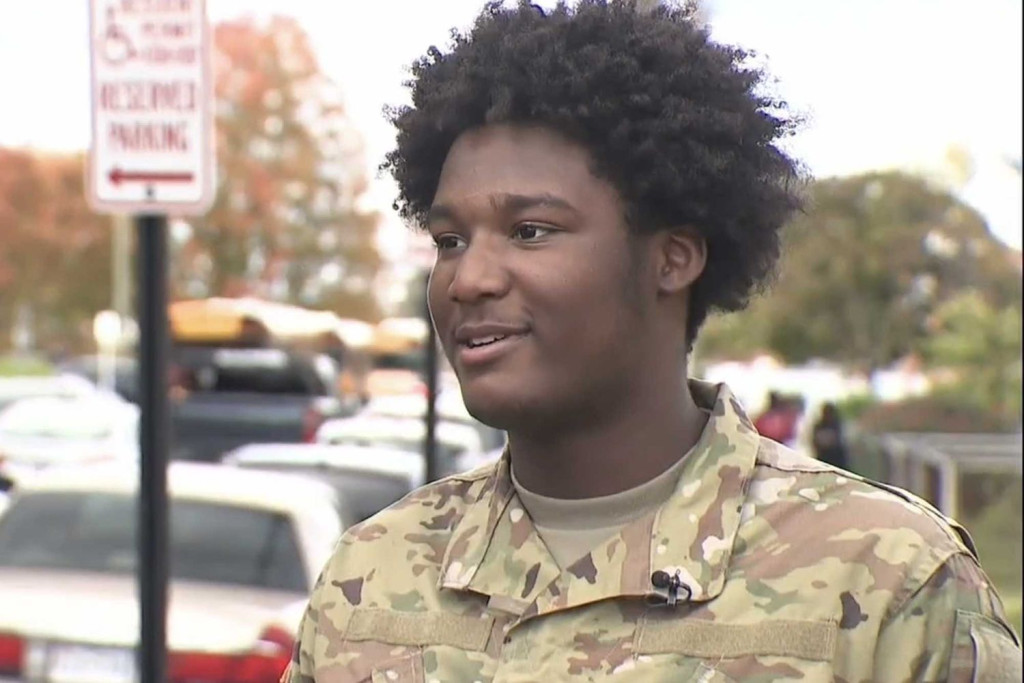

Last Monday, Taki Allen was sitting outside Kenwood High School after football practice when several police officers approached him with their guns drawn.

After cuffing and searching him, officers discovered that the AI system designed to detect armed individuals had mistakenly identified his bag of Doritos as a weapon.

Cyrus (@Cyrus_In_The_X), October 24, 2025:

"AI mistaken chips for a gun: In Baltimore, police handcuffed 16-year-old Taki Allen after an AI weapon-detection system flagged his bag of Doritos as a firearm. Eight patrol cars arrived before officers realized it was a false alarm."

“It was like eight cop cars that came pulling up for us,” Allen told WBAL-TV 11. “At first, I didn’t know where they were going until they started walking toward me with guns, talking about, ‘Get on the ground,’ and I was like, ‘What?’”

Police informed Allen that, according to the computer’s estimation, the bag of chips he had finished and crumpled in his pocket resembled a gun, and they showed him the image that triggered the detector.

“I was just holding a Doritos bag – it was two hands and one finger out, and they said it looked like a gun,” Allen said.

Following coverage of the event, the school’s principal sent a letter to Allen’s parents to provide details in a rather fascinating retelling:

“I am writing to provide information on an incident that occurred last night on school property. At approximately 7 p.m., school administration received an alert that an individual on school grounds may have been in possession of a weapon. The Department of School Safety and Security quickly reviewed and canceled the initial alert after confirming there was no weapon. I contacted our school resource officer (SRO) and reported the matter to him, and he contacted the local precinct for additional support. Police officers responded to the school, searched the individual and quickly confirmed that they were not in possession of any weapons. We understand how upsetting this was for the individual that was searched as well as the other students who witnessed the incident…”

Notice that the letter states the Department of School Safety and Security “reviewed and canceled” the alert. Yet police were still dispatched to the school grounds. If it had already been determined that the AI was wrong, why did the police response proceed at all?

I’m sure Allen’s family will have a number of questions for both the school and the police regarding proper procedures in cases of false alerts. And, of course, the entire ordeal raises the question of whether artificial intelligence is capable of handling matters like this.

We know how much more tragic this situation could have been. Having police on school campuses is already a contentious issue, and adding AI surveillance that could lead to life-or-death situations deserves even greater scrutiny.

Is this technology creating safer communities, or is it simply reinforcing the status quo when it comes to race and criminality? I’m sure Taki Allen and his family would argue the latter.